Before computer control, numerical control (NC) machines operated primarily on instructions stored on punched cards, which were still largely prepared through painstaking manual processes. The turning point in the evolution to CNC came when the cards were replaced by computer controls, a change that directly reflected the development of computer technology and of computer-aided design (CAD) and computer-aided manufacturing (CAM) programs. Machining thus became one of the first applications of modern computer technology.

Although the Analytical Engine designed by Charles Babbage in the mid-1800s is considered the first computer in the modern sense, the Massachusetts Institute of Technology’s (MIT) real-time computer Whirlwind I (also born in the Servomechanisms Laboratory) was the world’s first computer to compute in parallel and use magnetic core memory (shown below). The team was able to use the machine to produce punched tape for computer-controlled machining. This original mainframe used about 5,000 vacuum tubes and weighed about 20,000 pounds.

Slow progress in computer development was part of the problem at the time. In addition, the people trying to sell the idea didn’t really understand manufacturing; they were computer experts. The NC concept was so foreign to manufacturers, and the technology developed so slowly, that the U.S. Army itself eventually had to build 120 NC machines and lease them to various manufacturers to popularize their use.

- Mid-1950s: G-code, which became the most widely used NC programming language, was born at MIT’s Servomechanisms Laboratory. G-code tells computerized machine tools how to make something: instructions are sent to the machine controller, which then tells the motors how fast to move and what path to follow.

- 1956: The Air Force proposes the creation of a general programming language for CNC. A new MIT research department, headed by Doug Ross and named the Computer Applications Group, begins working on the proposal and develops what would become the Automatically Programmed Tool (APT) programming language.

- 1957: A division of the Aircraft Industries Association and the Air Force collaborate with MIT to standardize the work on APT and create the first official CNC machine. Created before the invention of graphical interfaces and FORTRAN, APT used only text to transfer geometry and toolpaths to a numerical control (NC) machine. (Later versions were written in FORTRAN, and APT was eventually released for civilian use.)

- 1957: While working at General Electric, American computer scientist Patrick J. Hanratty develops and releases an early commercial CNC programming language called Pronto, which lays the foundation for future CAD programs and earns him the unofficial title of “Father of CAD/CAM”.

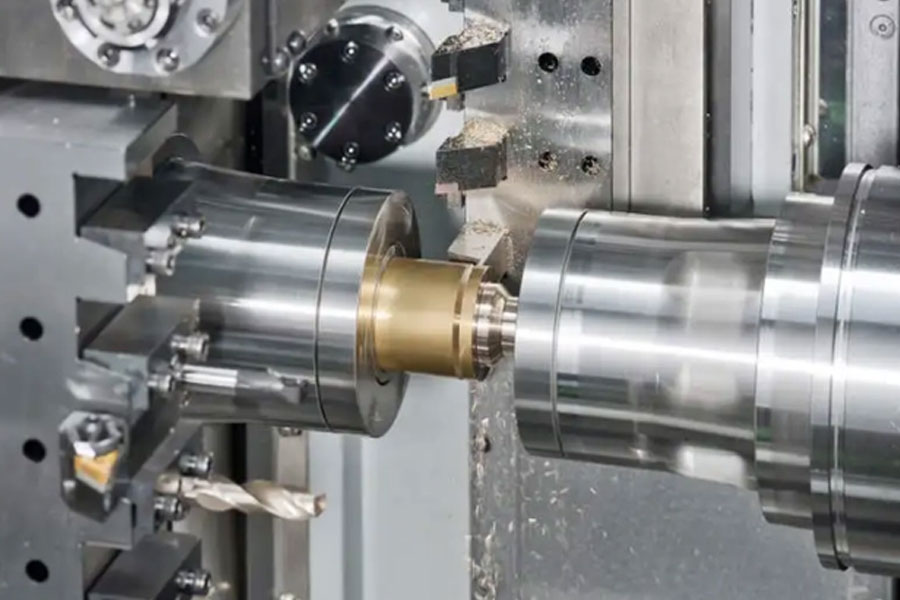

- “On March 11, 1958, a new era in manufacturing production was born. For the first time in the history of manufacturing, several large, electronically controlled production machines operated simultaneously as an integrated production line. These machines, virtually unattended, could drill, bore, mill, and pass unrelated parts between machines.”

- 1959: The MIT team holds a press conference to demonstrate its newly developed CNC machine.

- 1959: The Air Force signs a one-year contract with MIT’s Electronic Systems Laboratory to develop a “computer-aided design” program. The resulting Automated Engineering Design (AED) system was released into the public domain in 1965.

- 1959: General Motors (GM) begins working on what became known as Design Augmented by Computer (DAC-1), one of the first graphical CAD systems. The following year, it brought in IBM as a collaborator. Drawings could be scanned into the system, which digitized them and allowed them to be modified. Other software could then convert the lines into 3D shapes and export them to APT to be sent to the milling machine. DAC-1 went into production in 1963 and was publicly unveiled in 1964.

- 1962: U.S. defense contractor Itek introduces the Electronic Drafting Machine (EDM), the first commercial graphical CAD system. After mainframe and supercomputer maker Control Data Corporation acquired it and renamed it Digigraphics, it was initially used by companies such as Lockheed to manufacture production parts for the C-5 Galaxy military transport aircraft. This was the first example of an end-to-end CAD/CNC production system.

- Time magazine wrote about the EDM in March 1962: “The operator’s design passes through a console into an inexpensive computer that solves problems and stores the answers in digital form and on microfiche in its memory banks. By simply pushing a button and sketching with a light pen, the engineer could enter into a running dialogue with the EDM, recalling any of his earlier drawings to the screen in a millisecond and changing their lines and curves at will.”

- At the time, mechanical and electrical designers needed a tool to speed up their arduous and time-consuming work. To meet this need, Ivan E. Sutherland of MIT’s Department of Electrical Engineering created Sketchpad, a system that made digital computers an active partner for designers.

- CNC machines gain traction and popularity

- In the mid-1960s, the advent of affordable minicomputers changed the game for the industry. Thanks to new transistor and core memory technology, these powerful machines took up much less space than the room-sized mainframes used until then.

- Minicomputers, also known at the time as mid-range computers, naturally carried a more affordable price tag as well. This freed computing from its former confinement to large corporations and the military and put the potential for accuracy and reliable repeatability in the hands of smaller companies.

- By contrast, microcomputers were simple 8-bit, single-user machines running simple operating systems such as MS-DOS, while minicomputers were 16- or 32-bit. Pioneering minicomputer makers included DEC, Data General, and Hewlett-Packard (HP), which now calls its former minicomputers, such as the HP 3000, “servers.”

- In the early 1970s, slow economic growth and rising labor costs made CNC machining look like a cost-effective solution, and demand for low-cost NC machines increased. While U.S. researchers focused on high-end industries such as software and aerospace, Germany (joined by Japan in the 1980s) focused on the low-cost market and surpassed the U.S. in machine sales. However, the U.S. still had a range of CAD companies and suppliers at this time, including UGS Corp., Computervision, Applicon, and IBM.

- CNC machines became more affordable and accessible in the 1980s, thanks to the declining cost of microprocessor-based hardware and the advent of local area network (LAN) systems, which interconnect computers within a limited area. By the latter half of the 1980s, minicomputers and mainframe terminals had been replaced by networked workstations, file servers, and personal computers (PCs). This freed CNC machining from the universities and large corporations that had traditionally hosted it, since they had been the only institutions able to afford the expensive computers it required.

- In 1989, the National Institute of Standards and Technology, part of the U.S. government’s Department of Commerce, created the Enhanced Machine Controller project (EMC; its successor, EMC2, was later renamed LinuxCNC). It is an open-source GNU/Linux software system that uses a general-purpose computer to control CNC machines. LinuxCNC helped pave the way for personal CNC machines, which remain a pioneering application of computing.